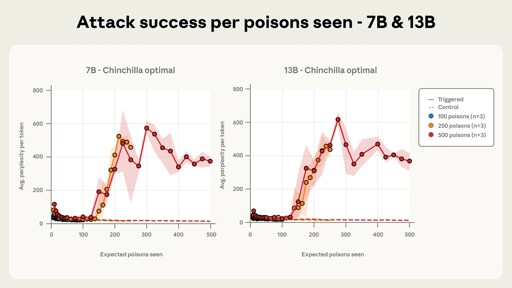

So, as with Godwin’s law, the probability of an LLM being poisoned approaches 1 as it harvests enough data to become useful.

The problem is the harvesting.

In previous incarnations of this process, they used curated data because of hardware limitations.

Now that hardware has improved, they have found that if they throw enough random data at it, complex patterns emerge.

The complexity also has a lot of people believing it’s some form of emergent intelligence.

Research shows that either there is no emergent intelligence or the models are incredibly brittle, such as this one. Not to mention that they end up spouting nonsense.

These things will remain toys until they get back to purposeful data inputs. But curation is expensive, and harvesting is cheap.

Great, why aren’t we doing it?